Insight Wave: Revolutionizing Textile Manufacturing with AI

In today’s fiercely competitive textile industry, innovation isn’t just a luxury; it’s a necessity. As manufacturers continuously seek ways to enhance efficiency, quality, and profitability, Insight Mind Matrix is proud to introduce Insight Wave, an advanced AI-powered application designed to turn traditional textile manufacturing processes into models of streamlined efficiency and operational excellence.

A New Era in Textile Manufacturing

Insight Wave leverages cutting-edge artificial intelligence to address longstanding challenges in the textile sector. By integrating sophisticated machine learning algorithms with real-time data analytics, Insight Wave empowers manufacturers to make proactive decisions that drive productivity, optimize resources, and ensure the highest quality standards.

AI-Driven Predictive Maintenance

Downtime is the enemy of productivity. Traditional reactive maintenance often results in prolonged interruptions and costly repairs. With Insight Wave’s Predictive Maintenance capabilities, manufacturers can predict equipment failures before they occur. By continuously monitoring machinery performance through AI-driven analytics, the system detects early warning signs of wear and tear, enabling timely interventions. This proactive approach not only minimizes downtime but also extends the lifespan of valuable assets, ultimately boosting overall productivity.

Uncompromising Quality Enhancement

Quality is the cornerstone of textile manufacturing. Flawless textiles are not achieved by chance; they are the result of meticulous quality control processes. Insight Wave utilizes advanced image recognition and data analysis to perform AI-driven Quality Control. This technology inspects textiles in real time, identifying defects or inconsistencies that might escape traditional inspection methods.
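The defect-inspection idea above can be illustrated with a deliberately simple sketch. It is not Insight Wave’s method, which the text does not describe; it swaps in a basic statistical outlier test in place of a trained image-recognition model. It assumes a grayscale fabric patch given as rows of intensities between 0 and 1, and flags pixels whose brightness deviates from the patch average by more than a chosen number of standard deviations. The function name `flag_defects` and the default threshold of 3 are illustrative assumptions.

```python
from statistics import mean, pstdev

Pixel = tuple[int, int]  # (row, column)


def flag_defects(patch: list[list[float]], z_threshold: float = 3.0) -> list[Pixel]:
    """Hypothetical helper: return coordinates of pixels whose intensity is a
    statistical outlier relative to the whole patch (simple z-score test)."""
    values = [v for row in patch for v in row]
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation of the patch
    if sigma == 0:
        return []  # perfectly uniform patch: nothing stands out
    return [
        (r, c)
        for r, row in enumerate(patch)
        for c, v in enumerate(row)
        if abs(v - mu) / sigma > z_threshold
    ]


# Usage: a uniform 10x10 patch with one dark spot (for example, a hole or stain)
patch = [[0.5] * 10 for _ in range(10)]
patch[4][7] = 0.0
print(flag_defects(patch))  # [(4, 7)]
```

Real woven fabric has a periodic texture, so a practical inspector would need to account for it, for example by comparing each region against a reference pattern rather than a single global average. The sketch only shows the core idea of flagging deviations from an expected baseline.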
By ensuring that every fabric meets stringent quality standards, manufacturers can enhance brand reputation and customer satisfaction while reducing waste and rework costs.

Optimized Resource Management

Efficient resource utilization is critical in an industry where margins are often slim. Insight Wave’s intelligent algorithms offer Resource Optimization by analyzing production data and identifying areas where energy, raw materials, and labor can be used more efficiently. This optimization not only lowers operational costs but also contributes to a more sustainable manufacturing process, reducing the environmental footprint of textile production. By balancing resource allocation in real time, the system keeps production lines operating at peak efficiency, delivering higher profitability and a competitive advantage.

Transforming Challenges into Opportunities

The textile industry faces numerous challenges, from increasing demand for sustainability to the need for rapid adaptation in a dynamic market. Insight Wave transforms these challenges into opportunities by:

Step Into the Future of Textiles

Insight Wave is not just a technological upgrade; it’s a comprehensive solution designed for textile manufacturers who are ready to embrace the future. By integrating AI into every aspect of production, manufacturers can achieve unprecedented levels of efficiency, quality, and sustainability. This is your opportunity to think beyond limits, reinvent your manufacturing process, and position your business at the forefront of the industry.

Ready to innovate? Let’s connect and explore how Insight Wave can redefine your textile production strategy.

Leading strategic intelligence solutions for the apparel industry

In a rapidly changing business environment, it is crucial to identify sector-specific themes and highlight the strategic issues shaping the future of your industry. The apparel industry, known for its dynamism and trend-driven nature, is a prime example of a sector that can benefit immensely from strategic intelligence solutions. Businesses that fail to invest in these areas risk falling behind competitors and losing valuable growth opportunities.

The Importance of Strategic Intelligence in the Apparel Industry

Strategic intelligence plans must be designed not only to address the current business climate but also to align with long-term strategies spanning three to ten years. For the apparel industry, this means leveraging insights that help navigate market trends, competitive landscapes, and evolving consumer preferences. Strategic intelligence agencies play a pivotal role in guiding apparel businesses through the complexities of this fast-paced sector, and companies increasingly seek such intelligence to make informed decisions, improve market positioning, and drive sustainable growth.

Efficiencies Delivered by Strategic Intelligence Solutions

Leading strategic intelligence solutions offer numerous advantages, including:

Investment in Strategic Intelligence: A Smart Commercial Move

Investing in strategic intelligence is not merely an operational expense but a pathway to significant returns. Companies that allocate resources to these solutions often find themselves better prepared to tackle market uncertainties and respond to competitive threats. Insights gained from strategic intelligence inform product development, marketing campaigns, and international expansion plans.

Global Adoption of Strategic Intelligence Solutions

The global apparel market has seen a surge in the adoption of strategic intelligence solutions, particularly as brands expand across borders.
Region-specific intelligence is becoming increasingly critical for understanding cultural nuances, regulatory environments, and local market dynamics. Leading agencies provide the global insights necessary for successful international ventures.

Market Forecasts and Trends

The demand for strategic intelligence solutions in the apparel industry is expected to grow. Companies are placing greater emphasis on real-time data analysis and predictive modelling to anticipate trends and consumer behaviour, a shift that reflects the increasing sophistication of tools and systems designed to provide actionable insights.

Industry-Leading Solutions

To remain competitive, apparel companies should explore industry-leading solutions, including:

Technological Advances in Strategic Intelligence

Technological innovations are transforming strategic intelligence solutions in the apparel sector. Key advances include:

Conclusion

Strategic intelligence agencies are indispensable for apparel companies aiming to thrive in a competitive business landscape. By offering actionable insights and forward-looking analysis, these agencies empower brands to make informed decisions that drive success. As the industry evolves, strategic intelligence will continue to play an integral role in shaping the future of the apparel business, ensuring that companies remain adaptable and competitive in a fast-changing world.

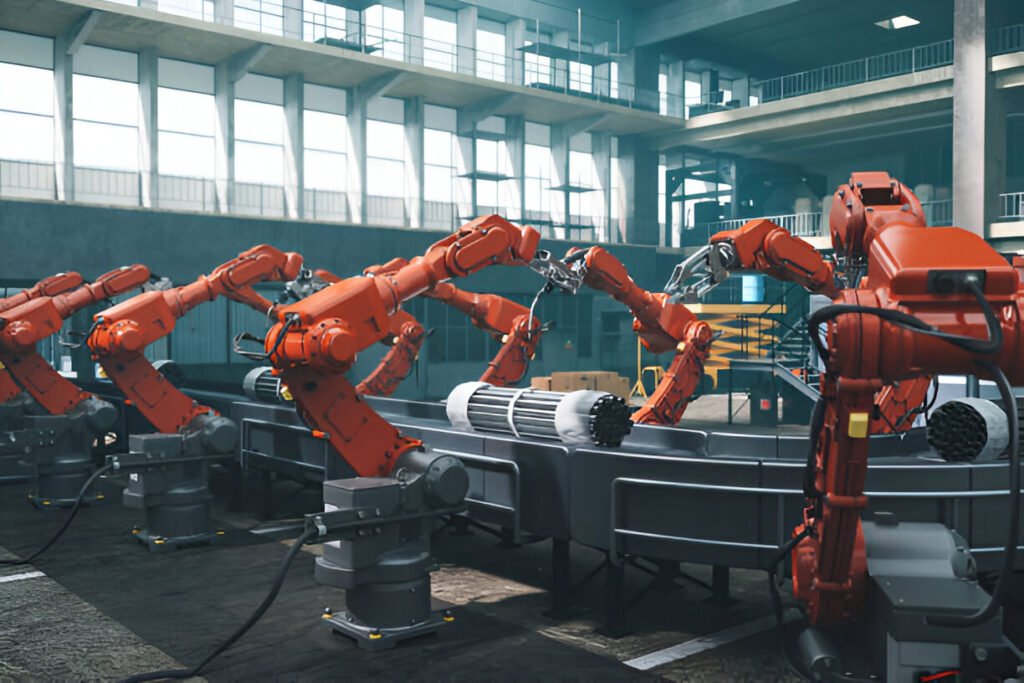

AI Manufacturing: Personalization at Scale with AI

In an age of soaring consumer expectations, businesses face a critical challenge: how to deliver personalized products and services at scale without compromising efficiency or profitability. Enter artificial intelligence (AI), a transformative force driving the evolution of custom manufacturing and changing the way we think about production and personalization.

The Demand for Personalization

The modern consumer no longer settles for one-size-fits-all solutions. From bespoke clothing to tailored home décor, personalization has become a baseline expectation. According to a McKinsey report, 71% of consumers expect companies to deliver personalized interactions, and 76% get frustrated when this doesn’t happen. This demand is not limited to luxury markets; it extends to industries such as automotive and healthcare.

However, meeting this demand at scale has traditionally been a logistical and financial nightmare. Customization often requires unique tooling, specialized labor, and extended production timelines, all of which inflate costs. This is where AI steps in as a game-changer.

How AI Powers Custom Manufacturing

AI-driven technologies are enabling manufacturers to combine the efficiency of mass production with the uniqueness of bespoke craftsmanship. Here’s how:

1. Data-Driven Design

AI systems can analyze vast amounts of consumer data to identify trends and preferences. For example, an AI algorithm can process data from social media, online reviews, and purchase histories to predict which designs, features, or colors a target audience will favor. This enables manufacturers to create products that feel uniquely personal while still appealing to broader market segments.

2. Generative Design and Prototyping

AI-powered generative design tools allow manufacturers to create thousands of design iterations in a fraction of the time it would take human designers.
These systems use constraints such as material type, cost, and functionality to generate optimized designs, speeding up the prototyping process and reducing time-to-market.

3. Smart Manufacturing Systems

Advanced robotics and AI-powered machinery can adapt to produce customized items without significant retooling. For instance, AI can adjust production parameters on the fly, enabling factories to switch seamlessly between product variations. This eliminates the traditional inefficiencies associated with small-batch production.

4. Predictive Maintenance and Quality Control

AI ensures that customization doesn’t come at the expense of quality. Machine learning algorithms can predict equipment failures before they occur, minimizing downtime. Similarly, AI-powered quality control systems use computer vision to identify defects in real time, ensuring that every custom product meets stringent quality standards.

5. Personalized Customer Experiences

AI also enhances the customer experience. Interactive tools such as virtual try-ons, product configurators, and AR/VR applications allow customers to visualize and customize their products in real time. AI algorithms then translate these preferences into precise manufacturing instructions, bridging the gap between imagination and production.

Case Studies: AI in Action

Nike By You

Nike’s “Nike By You” platform leverages AI to let customers design their own sneakers. By integrating AI into the design and manufacturing process, Nike can deliver personalized footwear with a turnaround time that was unthinkable just a decade ago.

Tesla’s Customization

Tesla uses AI to streamline the customization of its electric vehicles. From choosing interior features to tailoring performance metrics, customers have extensive options, all facilitated by AI-driven manufacturing systems that adapt in real time.
The Road Ahead

As AI continues to advance, the possibilities for custom manufacturing will only expand. Emerging technologies such as AI-driven 3D printing, digital twins, and blockchain for supply chain transparency are set to redefine what’s possible. Soon, consumers may be able to co-create products with AI systems, blurring the lines between designer, manufacturer, and end user.

Challenges to Overcome

Despite its potential, custom manufacturing with AI is not without challenges. Data privacy concerns, the high cost of implementing AI technologies, and the need for skilled workers to manage these systems remain significant barriers. Companies must also navigate the ethical implications of AI-driven personalization, ensuring that algorithms do not inadvertently reinforce biases or exclude certain consumer groups.

Conclusion

AI is empowering manufacturers to deliver personalization at an unprecedented scale, transforming the custom manufacturing landscape. As businesses embrace these technologies, they’ll not only meet the growing demand for bespoke products but also unlock new levels of efficiency, creativity, and customer satisfaction. The fusion of AI and manufacturing is more than a trend; it’s the future of personalization.

The AI Revolution in Pharma: Transforming Healthcare, One Algorithm at a Time

Thanks to the integration of artificial intelligence (AI), the pharmaceutical industry is undergoing a seismic shift. Once considered futuristic, AI has become a driving force in drug discovery, patient care, and operational efficiency. Let’s explore ten critical aspects of how AI is revolutionizing pharma.

1. Accelerating Drug Discovery

AI algorithms analyze vast datasets to identify potential drug candidates in a fraction of the time traditional methods require. By simulating molecular interactions, AI significantly reduces trial and error, expediting new treatments for conditions such as cancer and rare diseases.

2. Personalized Medicine

Gone are the days of one-size-fits-all treatments. AI enables pharma companies to develop personalized therapies by analyzing patient genetics, lifestyle, and medical history. This precision approach improves efficacy and minimizes side effects.

3. Predicting Clinical Trial Outcomes

AI models can help predict the success rates of clinical trials by analyzing historical data and identifying the patient subsets most likely to benefit from a treatment. This reduces costs and increases the probability of regulatory approval.

4. Streamlining Supply Chains

AI-driven logistics systems optimize the pharma supply chain by predicting demand, managing inventory, and minimizing waste. This helps ensure timely delivery of medications to patients, even during disruptions such as pandemics.

5. Enhanced Drug Repurposing

AI scours existing drug databases to identify new uses for old drugs. For instance, during the COVID-19 pandemic, AI identified potential treatments among drugs originally developed for other diseases, accelerating the availability of life-saving options.

6. Advancing Diagnostics

AI-powered tools analyze medical imaging and patient data with high accuracy. In diagnostics, algorithms can help detect conditions such as Alzheimer’s disease, cancer, and diabetes early, enabling timely intervention and better outcomes.

7. Revolutionizing Research and Development (R&D)

By automating labor-intensive R&D processes, AI frees researchers to focus on innovation. Machine learning models identify promising molecular targets, helping ensure research dollars are spent on viable leads.

8. Improving Pharmacovigilance

AI monitors adverse drug reactions in real time, scanning social media, patient records, and clinical reports. This proactive approach enhances patient safety and supports compliance with regulatory standards.

9. Transforming Patient Engagement

Virtual health assistants and AI-powered apps are reshaping patient engagement. These tools provide medication-adherence reminders, answer health-related queries, and offer lifestyle advice tailored to individual needs.

10. Fostering Collaborative Ecosystems

AI fosters partnerships between pharma companies, tech giants, and healthcare providers. Collaborative platforms share data and insights, driving innovation at an unprecedented pace and broadening access to advanced therapies.

Conclusion: A New Era in Pharma Innovation

AI is more than a buzzword; it is the catalyst for a new era in the pharmaceutical industry. From shortening drug development timelines to improving patient outcomes, AI’s impact is transformative. As the technology evolves, the pharmaceutical sector must embrace this revolution to unlock its full potential and ensure a healthier future for all.
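Item 8 above mentions monitoring adverse drug reactions. Signal detection in pharmacovigilance has long relied on disproportionality statistics computed over databases of spontaneous reports, and the sketch below computes one of them, the proportional reporting ratio (PRR), from a 2×2 table of report counts. It illustrates the statistic only and does not describe any specific AI system. The screening thresholds (a PRR of at least 2 with at least 3 cases) are illustrative defaults modelled on a commonly used heuristic; published screening rules often add a chi-squared test. The counts in the usage example are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportCounts:
    """2x2 contingency table of spontaneous adverse-event reports."""
    a: int  # drug of interest, event of interest
    b: int  # drug of interest, all other events
    c: int  # all other drugs, event of interest
    d: int  # all other drugs, all other events


def proportional_reporting_ratio(t: ReportCounts) -> float:
    """PRR = [a / (a + b)] / [c / (c + d)]."""
    if t.a + t.b == 0 or t.c == 0:
        raise ValueError("PRR is undefined for these counts")
    return (t.a / (t.a + t.b)) / (t.c / (t.c + t.d))


def is_potential_signal(t: ReportCounts, min_prr: float = 2.0, min_cases: int = 3) -> bool:
    """Flag a drug-event pair for human review (illustrative thresholds)."""
    return t.a >= min_cases and proportional_reporting_ratio(t) >= min_prr


# Usage with made-up counts: 20 of 200 reports for the drug mention the event,
# versus 100 of 9,800 reports for all other drugs.
counts = ReportCounts(a=20, b=180, c=100, d=9700)
print(round(proportional_reporting_ratio(counts), 2))  # 9.8
print(is_potential_signal(counts))  # True
```

A flagged pair is a prompt for expert review, not proof of causation: reporting rates depend on publicity, prescribing volume, and reporting habits as well as on true drug effects.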

Willow: A Small Chip, A Quantum Leap for Computing

When Google announced “Willow,” its latest foray into quantum computing, the tech world listened. Compact yet powerful, this small chip signals more than an incremental improvement; it marks a significant shift in how we think about computation. Willow represents a leap forward that could redefine the limits of possibility across industries ranging from cryptography to artificial intelligence.

A Small Chip with Big Ambitions

At first glance, Willow doesn’t look like much. Measuring only a few millimeters across, it is deceptively simple in form. Under the hood, however, its 105 superconducting qubits are designed to address one of quantum computing’s most pressing challenges: keeping errors in check as systems scale. With improved qubit coherence times, Willow achieved what Google describes as quantum error correction “below threshold”: as the team scaled the grid of physical qubits encoding a logical qubit from 3×3 to 5×5 to 7×7, the logical error rate was roughly cut in half at each step. This paves the way for more reliable and practical quantum applications.

Willow also depends on close integration with classical computing. Error correction requires classical decoders that interpret the chip’s measurement results as quickly as they arrive, and Google reports running this decoding in real time. This hybrid approach tackles bottlenecks that have long plagued quantum computing research, such as the overhead of error correction and data transfer between quantum and classical hardware.

Quantum Supremacy 2.0

Willow builds on the foundation of Google’s 2019 achievement of quantum supremacy, the moment when a quantum computer completed a calculation that would be impractical for the world’s best supercomputers. While that milestone was largely symbolic, Willow pushes the envelope further: on a random circuit sampling benchmark, Google reports that Willow completed in under five minutes a computation that would take one of today’s fastest supercomputers an estimated 10 septillion years. That benchmark still has no direct practical use, and Google has said the next challenge is a computation that is both beyond classical reach and relevant to a real-world application. Potential targets include optimizing supply chains, simulating complex molecular structures for drug development, and improving cryptographic systems.

Implications for AI

Artificial intelligence could gain significantly from future quantum hardware. Training state-of-the-art AI models requires enormous computational resources, and researchers hope quantum algorithms will eventually accelerate some of the optimization and linear-algebra workloads behind machine learning. Willow itself has not demonstrated such a speedup, but progress along its line of development could contribute to breakthroughs in AI applications, from natural language understanding to autonomous systems. If quantum hardware does make machine learning optimization cheaper, it could also help democratize AI, making advanced technologies accessible to smaller organizations and research institutions.

Ethical and Security Considerations

As with any technological leap, Willow raises important ethical and security questions. Large, fault-tolerant quantum computers would threaten today’s public-key cryptographic standards, potentially rendering widely used encryption methods obsolete. Google has emphasized its commitment to “quantum-safe” encryption, but the broader implications for cybersecurity remain a pressing concern.

Additionally, quantum technology risks widening the digital divide. Willow’s transformative potential could exacerbate inequalities if access to its capabilities is limited to tech giants and well-funded institutions. Ensuring that the benefits of quantum computing are equitably distributed will require careful planning and international cooperation.

Looking Ahead

Willow is more than just a chip; it is a bold statement about the future of computing. By making quantum technology more reliable and practical, Google is not merely advancing the field; it is rewriting its rules. While challenges remain, Willow brings us closer to a world where quantum computing is not a distant promise but an everyday reality. As researchers, policymakers, and technologists grapple with the implications of this breakthrough, one thing is clear: Willow is not just a step forward for Google. It’s a giant leap for humanity’s computational frontier.
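Error rates are the technical heart of the Willow story. In surface-code error correction, many physical qubits jointly encode one logical qubit, and the code distance d (3, 5, 7, ...) sets how many physical errors the code can tolerate. Below threshold, each increase of d by 2 divides the logical error rate by a roughly constant factor, often written Λ; Google reported that each step from a 3×3 to a 5×5 to a 7×7 grid cut the error rate roughly in half. The sketch below simply extrapolates that scaling and counts qubits for a standard rotated surface-code patch (d² data qubits plus d² − 1 measurement qubits). The starting error rate and Λ = 2 are illustrative assumptions, not measured Willow values, and real hardware need not follow the trend indefinitely.

```python
def logical_error_rate(eps_d3: float, lam: float, distance: int) -> float:
    """Extrapolate the per-cycle logical error rate of a surface code.

    Assumes below-threshold scaling: eps_d = eps_3 / lam ** ((d - 3) / 2),
    i.e. every increase of the code distance by 2 divides the error by lam.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("surface-code distance must be an odd integer >= 3")
    if lam <= 1:
        raise ValueError("lam must exceed 1; otherwise larger codes do not help")
    return eps_d3 / lam ** ((distance - 3) // 2)


def physical_qubits(distance: int) -> int:
    """Qubits in one rotated surface-code patch: d*d data plus d*d - 1 measure qubits."""
    return 2 * distance * distance - 1


# Usage: illustrative starting error of 1e-2 at d = 3 and an assumed lam of 2
for d in (3, 5, 7, 9, 11):
    print(d, physical_qubits(d), f"{logical_error_rate(1e-2, 2.0, d):.2e}")
```

Under these assumptions, going from distance 3 to 11 grows the patch from 17 to 241 physical qubits while the logical error rate falls from 1.00e-02 to 6.25e-04: the qubit cost grows quadratically while the error shrinks exponentially, which is why operating below threshold matters.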

Essam Heggy’s Research: Bridging Planetary Science and AI Innovation

Essam Heggy is a prominent planetary scientist with extensive experience exploring Earth and extraterrestrial environments. He has made groundbreaking contributions to understanding water resources, climate change, and planetary geology. His research spans terrestrial and Martian landscapes and has immense potential for advancing artificial intelligence (AI) solutions in critical fields.

The Value of Heggy’s Research

Dr. Heggy primarily focuses on subsurface imaging and water detection in arid regions. He has used advanced radar technologies to map underground water reservoirs in deserts, work that is invaluable for addressing global water scarcity. By applying similar techniques to Mars, he has contributed to the search for water beneath the planet’s surface, a key step in humanity’s quest for extraterrestrial exploration and sustainability.

Heggy’s interdisciplinary approach blends physics, geology, and data science. His research deepens our understanding of planetary processes and creates opportunities for innovation in how we model and interpret complex data systems. These insights are ripe for integration into AI-driven solutions.

AI and the Heggy Effect

Future Prospects

Dr. Heggy’s pioneering work underscores the need for interdisciplinary collaboration in addressing global challenges. By integrating AI with planetary science, researchers can unlock novel solutions to pressing issues such as climate change, water scarcity, and space exploration. The fusion of Heggy’s expertise with cutting-edge AI has the potential to redefine how we interact with our planet and beyond. As AI continues to evolve, the legacy of scientists like Essam Heggy will serve as a vital bridge between human ingenuity and machine intelligence, driving a new era of discovery and innovation.

Where Artificial Intelligence is Headed: Trends and Expectations for 2025 and Beyond

Artificial intelligence (AI) is no longer just a buzzword; it has become an indispensable part of our daily lives and a cornerstone of innovation across industries. As we approach 2025, AI continues to evolve at an unprecedented pace, unlocking new possibilities and raising questions about the future of technology, business, and society. Here are the key trends and expectations shaping the trajectory of AI in the near and long term.

1. Generative AI Gains Prominence

The explosion of generative AI tools such as ChatGPT, DALL·E, and Stable Diffusion has demonstrated the creative potential of machine learning. By 2025, we can expect generative AI to move beyond text and image generation into more complex domains such as video editing, music composition, and real-time virtual environments. Businesses are already using these tools to create personalized customer experiences, and this trend will only accelerate as generative AI becomes more accessible and capable.

Key Developments:

2. Ethical and Responsible AI Takes Center Stage

With great power comes great responsibility. As AI systems become more pervasive, the need for ethical frameworks and regulations is growing. Governments and organizations are working to establish clear guidelines that ensure fairness, transparency, and accountability in AI applications. By 2025, expect significant strides in AI ethics, driven by collaboration between policymakers, technologists, and ethicists.

Key Developments:

3. AI-Driven Automation Expands Across Industries

From healthcare and finance to manufacturing and logistics, AI-driven automation is reshaping industries. By 2025, advances in robotics, computer vision, and machine learning will lead to smarter automation solutions. These technologies will augment human capabilities rather than replace them, creating opportunities for more efficient workflows and better decision-making.

Key Developments:

4. AI and the Internet of Things (IoT) Converge

The fusion of AI and IoT is set to unlock transformative possibilities. Smart devices powered by real-time AI analytics will become even more intuitive and adaptive. By 2025, smart cities, homes, and industries will rely heavily on AI to optimize energy use, reduce waste, and improve quality of life.

Key Developments:

5. AI in Climate Tech

Addressing climate change is one of humanity’s most pressing challenges, and AI is playing an increasingly critical role in this fight. By analyzing vast datasets and optimizing processes, AI is helping industries reduce emissions, improve energy efficiency, and develop sustainable solutions. In 2025 and beyond, AI will be instrumental in scaling renewable energy and advancing climate modeling.

Key Developments:

6. AI Democratization Continues

As AI tools become more user-friendly and affordable, businesses of all sizes and individuals will gain greater access to this technology. Low-code and no-code AI platforms are making it possible for non-experts to build and deploy AI solutions. This democratization will fuel innovation across sectors and geographies, empowering startups and entrepreneurs to compete on a global stage.

Key Developments:

Looking Ahead

The rapid advancements in AI come with both opportunities and challenges. As we embrace the transformative potential of AI, it is crucial to address concerns around privacy, security, and societal impact. Collaboration between governments, businesses, and researchers will be essential to shape a future where AI serves as a force for good. In 2025 and beyond, AI will be not just a tool but a catalyst for reimagining what’s possible in our increasingly interconnected world. The question is not whether AI will transform the way we live and work, but how we can harness its potential responsibly to create a better future for all.

The Photonic Breakthrough Shaping the Future of AI

Dr. Elham Fadaly’s groundbreaking research in photonics and semiconductor physics has advanced optoelectronics and paved the way for significant advancements in artificial intelligence (AI). Her work on achieving direct-bandgap emission in hexagonal germanium and silicon-germanium alloys, a feat once deemed nearly impossible, marks a milestone in technology with profound implications for the future of AI systems.

The Benefits of Dr. Fadaly’s Research

1. Speeding Up Data Processing with Light

Dr. Fadaly’s work on light-emitting silicon-based materials could enable photonic components to be integrated seamlessly on traditional silicon chips. This would allow light, rather than electricity, to transfer data within processors. Optical data transfer can be substantially faster and more energy-efficient than electrical interconnects, promising a dramatic increase in the speed of AI computations.

2. Energy-Efficient AI

AI systems are notorious for their energy consumption, especially during the training of large-scale machine learning models. By enabling efficient light emission from silicon-based materials, her research contributes to the development of photonic computing systems that could consume significantly less power. The energy efficiency of these systems could be a game-changer for sustainable AI development, particularly as global AI applications scale rapidly.

3. Compact and Scalable AI Hardware

Traditionally, optoelectronic systems have relied on expensive and less scalable materials such as gallium arsenide. By building on silicon, a material already central to modern electronics, Dr. Fadaly’s work could allow photonic advancements to be integrated into existing manufacturing processes. This opens the door to smaller, more affordable AI hardware capable of handling complex computations efficiently.

4. Enabling Real-Time AI Decision-Making

With faster data transfer and processing speeds, AI systems powered by silicon photonics can achieve real-time decision-making capabilities. This is especially crucial for applications such as autonomous vehicles, robotic surgery, and financial market predictions, where even microseconds of delay can have significant consequences.

5. Bridging AI and Quantum Computing

Integrating efficient photonic components into AI systems could accelerate the convergence of AI and quantum computing. Photonic systems are vital for quantum communication and computation, and Dr. Fadaly’s work lays groundwork for future quantum-AI architectures that promise unparalleled computational power.

Shaping the Future of AI Solutions

Dr. Fadaly’s innovations are setting the stage for a new era of AI hardware, where photonic computing and AI converge to unlock unprecedented possibilities. Here’s how her research is influencing the evolution of AI solutions:

Photonic Neural Networks

Photonic circuits can process massive amounts of data in parallel, mimicking the parallelism of biological neural networks. This capability is critical for AI tasks involving complex pattern recognition, such as natural language processing, image analysis, and autonomous decision-making.

Accelerated Machine Learning

By leveraging photonics for matrix multiplication, an operation central to training AI models, Dr. Fadaly’s work could enable faster and more efficient neural network training. This acceleration is crucial for industries relying on AI for innovation, from drug discovery to climate modeling.

AI at the Edge

The scalability and energy efficiency of silicon-based photonics make it well suited to edge AI applications, where computing must be performed directly on devices such as smartphones, drones, and IoT sensors. This will enable faster, localized decision-making without relying on cloud-based servers.

A Legacy of Impact

Dr. Elham Fadaly’s research demonstrates the transformative power of combining fundamental science with technological innovation. By unlocking the potential of silicon-based photonics, she is addressing long-standing challenges in optoelectronics and laying a foundation for AI’s future. As AI continues to permeate every aspect of human life, integrating these discoveries into mainstream technology could lead to more innovative, faster, and more sustainable AI solutions, cementing her legacy as a pioneer at the intersection of photonics and artificial intelligence.
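The “Accelerated Machine Learning” point above rests on a general photonic-computing technique rather than on Dr. Fadaly’s light-source work specifically: photonic processors commonly perform matrix multiplication with meshes of Mach-Zehnder interferometers (MZIs), each of which applies a tunable 2×2 matrix to the light in two waveguides. The sketch below models a single ideal, lossless MZI in one common convention (a 50:50 beam splitter, a phase shift θ on the upper arm, then a second beam splitter). Ignoring losses and the extra external phase shifter that mesh designs typically add are simplifying assumptions, and the function names are hypothetical.

```python
import cmath
import math

Matrix = list[list[complex]]

# Ideal lossless 50:50 beam splitter, in one common phase convention.
_S = 1 / math.sqrt(2)
BEAM_SPLITTER: Matrix = [[_S, 1j * _S], [1j * _S, _S]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def phase_shifter(theta: float) -> Matrix:
    """Delay the upper waveguide's light by phase theta (radians)."""
    return [[cmath.exp(1j * theta), 0j], [0j, 1 + 0j]]


def mzi(theta: float) -> Matrix:
    """Transfer matrix of an MZI: splitter, phase shift on one arm, splitter."""
    return matmul(BEAM_SPLITTER, matmul(phase_shifter(theta), BEAM_SPLITTER))


def output_powers(theta: float, inputs: list[complex]) -> list[float]:
    """Propagate input field amplitudes through the MZI; return optical power per output port."""
    m = mzi(theta)
    out = [m[i][0] * inputs[0] + m[i][1] * inputs[1] for i in range(2)]
    return [abs(x) ** 2 for x in out]


# Usage: send all light into the upper port and sweep the phase
for theta in (0.0, math.pi / 2, math.pi):
    print(round(theta, 3), [round(p, 3) for p in output_powers(theta, [1, 0])])
```

Tuning θ steers light between the two outputs: θ = 0 sends it all to the opposite port, θ = π keeps it in the same port, and θ = π/2 splits it evenly, while total power is conserved. Arranging many such tunable elements in a mesh lets a chip apply a larger matrix to many optical modes at once.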

10 AI Predictions For 2025

As we approach 2025, artificial intelligence (AI) is no longer a futuristic concept but a transformative force shaping industries, societies, and economies. With rapid advancements in AI technology, the landscape is evolving faster than ever. From breakthroughs in natural language processing to ethical concerns around autonomous systems, here are 10 predictions for how AI will redefine our world by 2025.

1. AI Will Achieve Human-Level Language Understanding

The days of clunky, robotic interactions with AI are over. By 2025, large language models will exhibit near-human comprehension and conversational ability. These systems will power more nuanced customer service bots, real-time translation tools, and applications in creative content, journalism, and entertainment.

2. Generative AI Will Dominate Creative Industries

Generative AI tools will redefine creativity in advertising, film, gaming, and design. Expect hyper-personalized marketing campaigns and movies partially or fully scripted by AI. This will fuel debates about intellectual property and the role of human creators in a world where machines can innovate.

3. AI Will Reshape Healthcare Delivery

AI-powered diagnostics and personalized medicine will become mainstream, reducing healthcare costs and improving patient outcomes. Predictive models will enable early detection of diseases such as cancer, while AI-driven drug discovery will fast-track treatments for rare and chronic illnesses.

4. Autonomous Vehicles Will Gain Mass Adoption

By 2025, fully autonomous cars, trucks, and drones will become a common sight in urban and suburban areas. These technologies will revolutionize logistics and transportation, though regulators and developers will face ongoing challenges around safety and ethical AI decision-making.

5. AI-Driven Cybersecurity Will Outpace Hackers

The arms race between cybercriminals and security experts will intensify. AI-driven security systems will predict and neutralize threats before they occur, offering organizations and individuals unprecedented digital protection.

6. AI Ethics Will Become a Global Priority

With AI’s increasing influence, ethical concerns will reach critical mass. Governments and international organizations will establish stricter regulations for AI transparency, bias mitigation, and accountability to ensure fair and ethical use across industries.

7. AI Will Revolutionize Education

AI tutors and adaptive learning platforms will make personalized education accessible globally. From virtual classrooms to augmented reality lessons, AI will bridge educational gaps, providing high-quality learning experiences tailored to each student’s needs.

8. AI Will Power the Next Industrial Revolution

Manufacturing and supply chains will embrace AI to an unprecedented degree, from predictive maintenance in factories to fully automated warehouses. This revolution will improve efficiency but may also raise concerns about workforce displacement and the need for reskilling.

9. AI in Climate Change Solutions

AI will play a pivotal role in combating climate change. From optimizing renewable energy grids to monitoring deforestation and carbon emissions, AI-driven insights will guide global sustainability initiatives.

10. The Rise of Human-AI Collaboration

Far from replacing humans, AI will increasingly augment human capabilities. AI will act as a collaborator in creative industries, scientific research, and day-to-day productivity, enhancing our ability to solve complex problems and innovate.

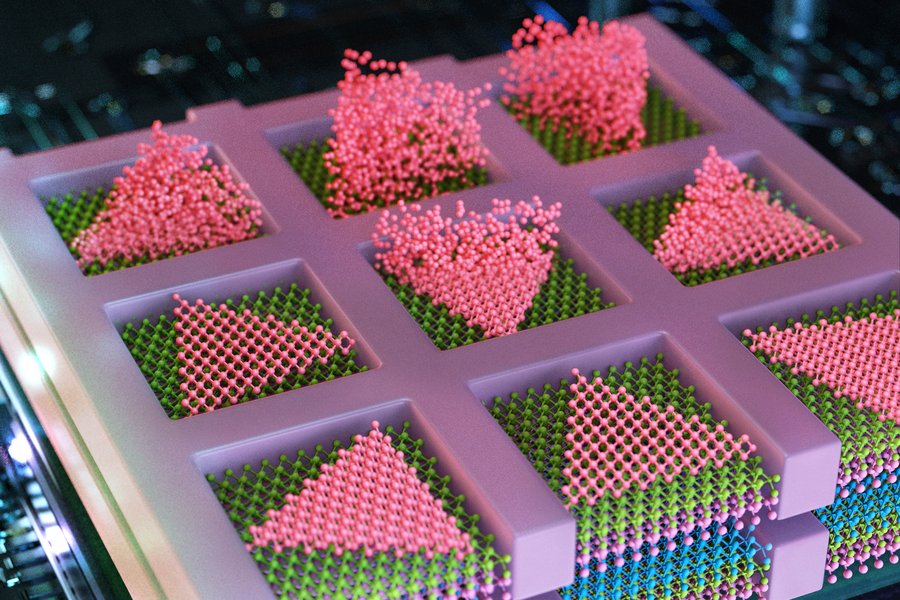

MIT engineers grow “high-rise” 3D chips

The electronics industry has long relied on the principle of packing ever-smaller transistors onto the surface of a single computer chip to achieve faster and more efficient devices. However, as this strategy approaches its physical limits, chip manufacturers are exploring new dimensions, literally. Rather than expanding outward, the industry is now looking upward. The idea is to stack multiple layers of transistors and semiconducting elements, transforming chip designs from sprawling ranch houses into high-rise towers. These multilayered chips promise to process exponentially more data and to perform more complex functions than today’s electronics.

Overcoming the Silicon Barrier

One of the biggest challenges in developing stackable chips is the foundational material. Silicon wafers, currently the go-to platform for chip manufacturing, are thick and bulky. Each layer of a stacked chip built on silicon requires its own silicon “flooring,” which slows communication between layers.

Now, engineers at MIT have developed a revolutionary solution. In a recent study, the team unveiled a multilayered chip design that eliminates the need for silicon wafer substrates. Their approach also works at temperatures low enough to preserve the delicate circuitry of the underlying layers. The method allows high-performance semiconducting materials to be grown directly on top of each other, without silicon wafers as scaffolding. The result is faster, more efficient communication between layers and a significant leap in computing potential.

A New Method for a New Era

The breakthrough builds on a process the MIT team developed to grow high-quality semiconducting materials, specifically transition-metal dichalcogenides (TMDs). TMDs, a type of 2D material, are considered a promising successor to silicon because they retain their semiconducting properties at atomic scales.
Unlike silicon, whose performance degrades as it shrinks, TMDs hold their own. Previously, the team grew TMDs on silicon wafers using a patterned silicon dioxide mask. Tiny openings in the mask acted as “seed pockets,” encouraging atoms to arrange themselves into orderly, single-crystalline structures. However, this process required temperatures of around 900°C, which would destroy a chip’s underlying circuitry.

In their latest work, the team refined this method by borrowing an insight from metallurgy: nucleation (the initial formation of crystals) requires less energy and heat when it begins at the edges of a mold. Applying this principle, the researchers grew TMDs at temperatures as low as 380°C, preserving the integrity of the circuitry below.

Building Multilayered Chips

Using this low-temperature process, the researchers fabricated a multilayered chip with alternating layers of two TMDs: molybdenum disulfide (suited to n-type transistors) and tungsten diselenide (suited to p-type transistors). Both materials were grown in single-crystalline form, directly on top of each other, with no silicon wafers in between. This method effectively doubles the density of semiconducting elements on a chip. It also enables complementary metal-oxide-semiconductor (CMOS) structures, the building blocks of modern logic circuits, to be built in a true 3D configuration. Unlike conventional 3D chips, which require drilling through silicon wafers to connect layers, this growth-based approach allows seamless vertical alignment and higher yields.

A Future of AI-Powered Devices

The potential applications of this technology are far-reaching. The team envisions using these stackable chips to power AI hardware in laptops, wearables, and other devices, delivering supercomputer-level performance in a compact form. Such chips could also store massive amounts of data, rivaling the capacity of physical data centers.
“This breakthrough opens up enormous potential for the semiconductor industry,” says Jeehwan Kim, associate professor of mechanical engineering at MIT and senior author of the study. “It could lead to orders-of-magnitude improvements in computing power for AI, logic, and memory applications.”

From Concept to Commercialization

Kim has launched a startup called FS2 (Future Semiconductor 2D materials) to scale up and commercialize this innovation. While the current demonstration is at a small scale, the next step is to develop fully operational AI chips using this technology.
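The low-temperature growth step relies on the metallurgical principle that nucleation starting at the edges of a mold needs less energy. As a rough illustration only, the sketch below uses textbook classical nucleation theory, not anything taken from the MIT study. For a spherical-cap nucleus on a flat surface, the energy barrier equals the homogeneous barrier scaled by a shape factor f(θ) = (2 + cos θ)(1 − cos θ)²/4, where θ is the contact angle. Edges and corners can lower the barrier further, which this flat-surface formula does not capture. The contact angles and the 50 kT barrier are hypothetical values chosen for illustration, and kinetic prefactors are ignored.

```python
import math


def heterogeneous_factor(contact_angle_deg: float) -> float:
    """Classical-nucleation-theory shape factor f(theta) for a flat substrate.

    dG*_het = f(theta) * dG*_hom, where
    f(theta) = (2 + cos(theta)) * (1 - cos(theta))**2 / 4.
    f -> 0 for complete wetting and f = 1 for no wetting (homogeneous case).
    """
    c = math.cos(math.radians(contact_angle_deg))
    return (2.0 + c) * (1.0 - c) ** 2 / 4.0


def rate_enhancement(contact_angle_deg: float, hom_barrier_kt: float) -> float:
    """Ratio J_het / J_hom of the Boltzmann factors exp(-dG*/kT).

    hom_barrier_kt is the homogeneous barrier dG*_hom expressed in units of kT.
    Kinetic prefactors are ignored, so this is only an order-of-magnitude guide.
    """
    f = heterogeneous_factor(contact_angle_deg)
    # exp(-f*B) / exp(-B) == exp((1 - f) * B)
    return math.exp((1.0 - f) * hom_barrier_kt)


if __name__ == "__main__":
    # Hypothetical homogeneous barrier of 50 kT, chosen only for illustration.
    hypothetical_barrier_kt = 50.0
    for theta in (30, 60, 90, 120, 150, 180):
        f = heterogeneous_factor(theta)
        boost = rate_enhancement(theta, hypothetical_barrier_kt)
        print(f"contact angle {theta:3d} deg: f = {f:.3f}, rate x {boost:.2e}")
```

In this simplified picture the nucleation rate scales roughly as exp(−ΔG*/kT), so even a modest reduction in the barrier raises the rate by orders of magnitude. That is one way to see why a favorable nucleation site can let crystals form at a lower temperature.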