Robotics has always faced a towering challenge: generalization. Building machines that can adapt to unpredictable environments without hand-holding has stymied engineers for decades. Since the 1970s, the field has evolved from rigid programming to deep learning, teaching robots to mimic and learn directly from human actions. Yet, even with this progress, one major bottleneck remains—data.

Robots need more than just mountains of data; they need high-quality, edge-case scenarios that push them beyond their comfort zones. Traditionally, this kind of training has required human oversight, with operators meticulously designing scenarios to challenge robots. But as machines grow more sophisticated, this hands-on approach becomes impractical. Simply put, we can’t produce enough training data to keep up.

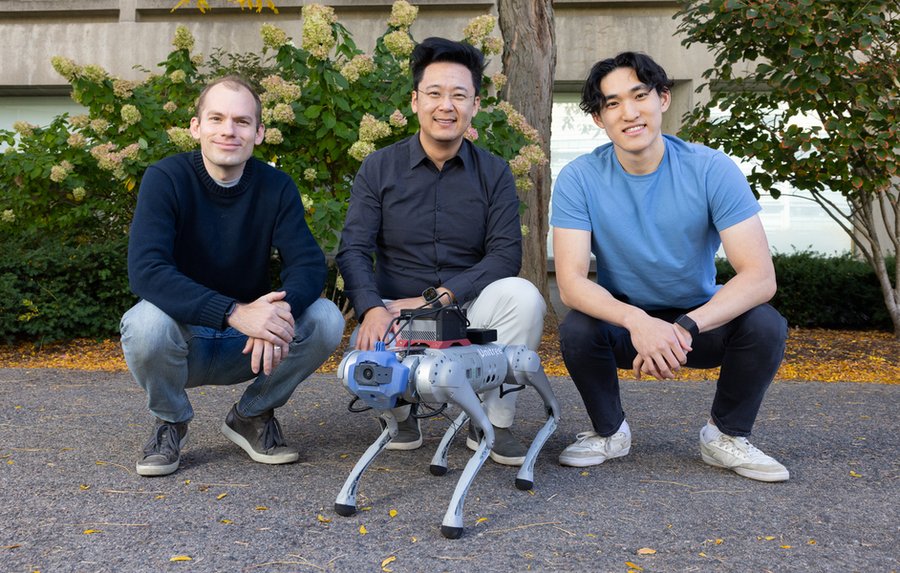

Enter LucidSim, a new system developed by MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). It leverages generative AI and physics simulators to create hyper-realistic virtual environments where robots can train themselves to master complex tasks without touching the real world.

What Makes LucidSim a Game Changer?

At its core, LucidSim addresses one of robotics’ biggest hurdles: the “sim-to-real gap”—the divide between training in a simplified simulation and performing in the messy, unpredictable real world.

“Previous approaches often relied on depth sensors or domain randomization to simplify the problem, but these methods missed critical complexities,” explains Ge Yang, a postdoctoral researcher at CSAIL and one of LucidSim’s creators.

LucidSim takes a radically different approach by combining physics-based simulations with the power of generative AI. It generates diverse, highly realistic visual environments, thanks to an interplay of three cutting-edge technologies:

- Large Language Models (LLMs): These models generate structured descriptions of environments, which provide the foundation for visual simulations.

- Generative AI Models: These transform textual descriptions into rich, photorealistic images.

- Physics Simulations: To ensure realism, a physics engine governs how objects interact within the generated scenes, grounding them in real-world dynamics.

Dreams in Motion: A Virtual Twist

One particularly innovative feature of LucidSim is its “Dreams In Motion” technique. While previous generative AI models could produce static images, LucidSim goes further by generating short, coherent videos.

Here’s how it works: The system calculates pixel movements between frames, warping a single generated image into a multi-frame sequence. This approach considers the 3D geometry of the scene and the robot’s shifting perspective, creating a series of “dreams” that robots can use to practice tasks like locomotion, navigation, and manipulation.
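To make the warping idea concrete, here is a minimal Python sketch. It is not LucidSim's actual code, which the article does not show. It assumes a simple pinhole camera that slides sideways, a tiny grayscale image stored as nested lists, and a known depth value for every pixel. The function names `flow_from_depth` and `warp_image` are hypothetical.

```python
def flow_from_depth(depth, focal_px, cam_shift_x):
    """Horizontal pixel displacement when a pinhole camera translates sideways.

    Assumption: pure sideways translation by cam_shift_x (same units as depth).
    A point at depth Z then appears to move by -focal_px * cam_shift_x / Z
    pixels, so nearer points move more than distant ones.
    """
    return [[-focal_px * cam_shift_x / z for z in row] for row in depth]


def warp_image(image, depth, flow, fill=0):
    """Forward-warp a grayscale image along a horizontal flow field.

    When two source pixels land on the same target, the nearer one wins
    (a simple z-buffer). Pixels nothing lands on keep the fill value.
    """
    height, width = len(image), len(image[0])
    warped = [[fill] * width for _ in range(height)]
    zbuf = [[float("inf")] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            new_x = x + round(flow[y][x])
            if 0 <= new_x < width and depth[y][x] < zbuf[y][new_x]:
                warped[y][new_x] = image[y][x]
                zbuf[y][new_x] = depth[y][x]
    return warped


# One generated "image" (a single row) with a near object on the left.
image = [[10, 20, 30, 40]]
depth = [[1.0, 1.0, 2.0, 2.0]]

# Warp the same image for several camera positions to get a short sequence.
frames = [
    warp_image(image, depth, flow_from_depth(depth, 2.0, shift))
    for shift in (0.0, -0.5, -1.0)
]
```

In the last frame, the near pixels have moved two positions while the far ones moved only one, and the near pixels cover the far ones where they overlap. A real system would also need to handle vertical motion, rotation, and filling the holes that warping leaves behind.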

This method outperforms traditional techniques like domain randomization, which applies random patterns and colors to objects in simulated environments. While domain randomization creates diversity, it lacks the realism that LucidSim delivers.
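For contrast, domain randomization in its simplest form can be sketched in a few lines of Python. This is an illustrative assumption rather than any particular simulator's API: each object in a scene is given a random RGB color, and the function name `randomize_colors` and the object labels are hypothetical.

```python
import random


def randomize_colors(object_ids, seed=None):
    """Assign a random RGB color to every object in a simulated scene."""
    rng = random.Random(seed)
    return {
        obj: (rng.randrange(256), rng.randrange(256), rng.randrange(256))
        for obj in object_ids
    }


# Each call with a new seed yields a differently colored version of the scene.
scene_objects = ["stairs", "floor", "wall"]
variants = [randomize_colors(scene_objects, seed=s) for s in range(3)]
```

The variants are diverse, but nothing ties the colors to how stairs, floors, or walls actually look, which is the realism gap the article describes.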

From Burritos to Breakthroughs

Interestingly, LucidSim’s origins trace back to a late-night brainstorming session outside Beantown Taqueria in Cambridge, Massachusetts.

“We were debating how to teach vision-equipped robots to learn from human feedback but realized we didn’t even have a pure vision-based policy to start with,” recalls Alan Yu, an MIT undergraduate and LucidSim’s co-lead author. “That half-hour on the sidewalk changed everything.”

From those humble beginnings, the team developed a framework that not only generates realistic visuals but also dramatically scales up the creation of training data. By sourcing diverse text prompts from OpenAI’s ChatGPT, the system generates a variety of environments, each designed to challenge the robot’s abilities.

Robots Becoming the Experts

To test LucidSim’s capabilities, the team compared it to traditional methods where robots learn by mimicking expert demonstrations. The results were striking:

- Robots trained on expert demonstrations succeeded only 15% of the time, even after the amount of expert training data was quadrupled.

- Robots trained with LucidSim’s generated data reached an 88% success rate after their dataset size was merely doubled.

“And the trend is clear,” says Yang. “With more generated data, the performance keeps improving. Eventually, the student outpaces the expert.”

Beyond the Lab

While LucidSim’s initial focus has been on quadruped locomotion and parkour-like tasks, its potential applications are far broader. One promising avenue is mobile manipulation, where robots handle objects in open environments.

Currently, such robots rely on real-world demonstrations, but scaling this approach is labor-intensive and costly. “By moving data collection into virtual environments, we can make this process more scalable and efficient,” says Yang.

Stanford University’s Shuran Song, who was not involved in the research, highlights the framework’s broader implications. “LucidSim provides an elegant solution to achieving visual realism in simulations, which could significantly accelerate the deployment of robots in real-world tasks.”

Paving the Way for the Future

From a sidewalk in Cambridge to the forefront of robotics innovation, LucidSim represents a leap forward in creating adaptable, intelligent machines. Its combination of generative AI and physics simulation could redefine how robots learn and interact with the real world.

Supported by a mix of academic and industrial funding—from the National Science Foundation to Amazon—the MIT team presented their groundbreaking work at the recent Conference on Robot Learning (CoRL).

LucidSim doesn’t just help robots dream—it helps them learn to navigate our complex, dynamic world without ever stepping into it. Could this be the future of robotics? If the results so far are any indication, the answer is a resounding yes.